Recruiters, please stop

This is a post I have wanted to write for a long time, ever since I changed my job modality (from working for a company to working as a consultant).

Before that change, I made sure I wasn't showing myself as available for work on LinkedIn. I even did the same on Upwork, because in February I had moved into a new full-time job and I didn't want to receive any contracts or requests, as I would definitely not be able to help anyone and I had a new reputation to build.

Now, fast forward to September, and I am constantly getting a lot of interview offers for job positions. I haven't changed my LinkedIn since February, and I will still say it: I'm not available for work. I am already engaged with another company and I'm fully dedicated to working for that company, even if they boot me one day and I can't do anything about it (which is possible, as I'm a contractor right now).

Today I watched a video from a Brazilian entrepreneur, and he talks about this whole thing I'm describing. Recruiters just see your profile, see your certifications and think "hey, I want that guy". They forget to check that I'm currently working for a company, which is a pretty shitty move and shows a lack of consideration for people who have lost their jobs in recent months and are now struggling to find something.

So please, contact someone who really needs a job instead. I am not available. Thanks.

My Thelio R2 finally came

Disclaimer: I haven't been paid to provide this review. I am doing it because there aren't that many reviews for System76 products, including the normal Thelio.

Recently I ordered a Thelio computer with the following specs:

- AMD 3rd Gen Ryzen 5 3600X (3.8 up to 4.4 GHz - 6 Cores - 12 Threads) with PCIe 4.0

- 8GB of memory (I am going to buy more separately, as the price offered at the System76 webpage was oddly higher than the prices here)

- 120GB SSD (same situation as with the memory)

- 8GB Radeon RX 5700 XT with 2560 Stream Processors

The reason I went with something like System76 is their proposal of open source hardware. It is a bit more expensive than building your own computer or even getting a Mac device with similar specs. Plus, at least here where I live (which is outside of the US), PC builders are not used to building small computers, so it is almost impossible to find small cases. At least I had been saving money to buy a new computer, as my Thinkpad T430 was showing its age when running Packer.

Unboxing

The good thing is that the packaging was a box inside another box. When I saw the courier guy delivering the box in the wrong way, I got scared for a while, but in the end, everything was fine.

Opening the box, the relief came and I saw the main box (the one with the product) surrounded by foam structures. This might be helpful to avoid any vibrations or such.

Taking the box out of the external cardboard box, you can see some very nice packaging. Even a friend who studied Industrial Design praised it, which is cool. It shows the Open Source Hardware sign, plus a cool picture from the moon. Opening that box, I was greeted with a "Welcome to Thelio" message.

Now, you could see the top of the case and a postcard, which is a common gift from System76 with their products. I decided to lift the computer from the packaging and take more pictures of the welcome messages on the box.

Product description

I won't go into much detail as I'm not a hardware expert, plus there might be a part 2 with the internals.

The case is beautiful. It has a side of wood on the right (real wood by the way) and a very detailed side on the left showing the Rocky Mountains from Colorado. On the front, you will find the System76 logo on the bottom and the power button above.

The back is where everything is located. In my case, on the top left, you can find four USB 3.2 Gen 2 ports (definitely the blue ones, and I suppose the white would be the fourth), a USB 3.2 Gen 2 Type-A (the red one), two DisplayPorts and an HDMI port. Also, there is a USB-C and an Ethernet port.

Below the middle section, you will find the Mic, Line out and Line in jacks (not marked, unfortunately), and, depending on your GPU, a section with the corresponding ports.

If you look at the fan part, it has something like the solar system engraved on it (cool huh?).

After finally connecting the whole thing, everything ran A-OK. You are greeted with a System76 boot screen (it uses Coreboot, double yay) and, for the first setup, it will boot the Pop!_OS installation. Some people say they have heard noisy fans when running the installation, but I heard nothing.

When I get into changing components, which would be in a few days, I can post its internals, so you can have a look at it.

My complaints so far

Honestly, I have no complaints on the hardware side. Everything was recognized as expected and, as I said previously, I just want a computer and I don't want to nitpick on the hardware because I really lack the knowledge to do that right. Now, my nitpick is with Pop!_OS. This will be mostly a complaint against Ubuntu and derivatives though, and it will deserve another post for sure.

When starting the OS, I was greeted with GNOME. I have been using GNOME for a long time and I don't have any problems with it, especially because I use Fedora on my Thinkpad (which also uses GNOME). But here the shortcuts and the whole behavior are totally different. Like, if I move the mouse to the top left corner, it doesn't show the applications overview. If I do Super+Up, it doesn't maximize the window. Damn, I really hope that won't be difficult to replicate, although I feel that shouldn't be a thing.

Then, when connecting my headphones, Pop!_OS didn't detect them. I was pretty worried, as I was thinking I needed to buy extras just to plug any analog audio device into it. Luckily, it seems System76 has a support page on how to troubleshoot audio issues. That's good, but it makes me think about how incomplete Pop!_OS is, because it needs to be tweaked to get something basic working, even though System76 itself is the one that develops it and, well, it came on one of their devices. It's like a MacBook not being able to read any connected USB device until you do some tweaking to get that running.

I still need to play a little more with it, so this post might get filled with updates. I was thinking of getting NixOS on it (I have been playing a lot with Nix and Home Manager), but honestly, I need a daily device with good support. I might try it on my laptop though, if my experience with OpenBSD fails miserably.

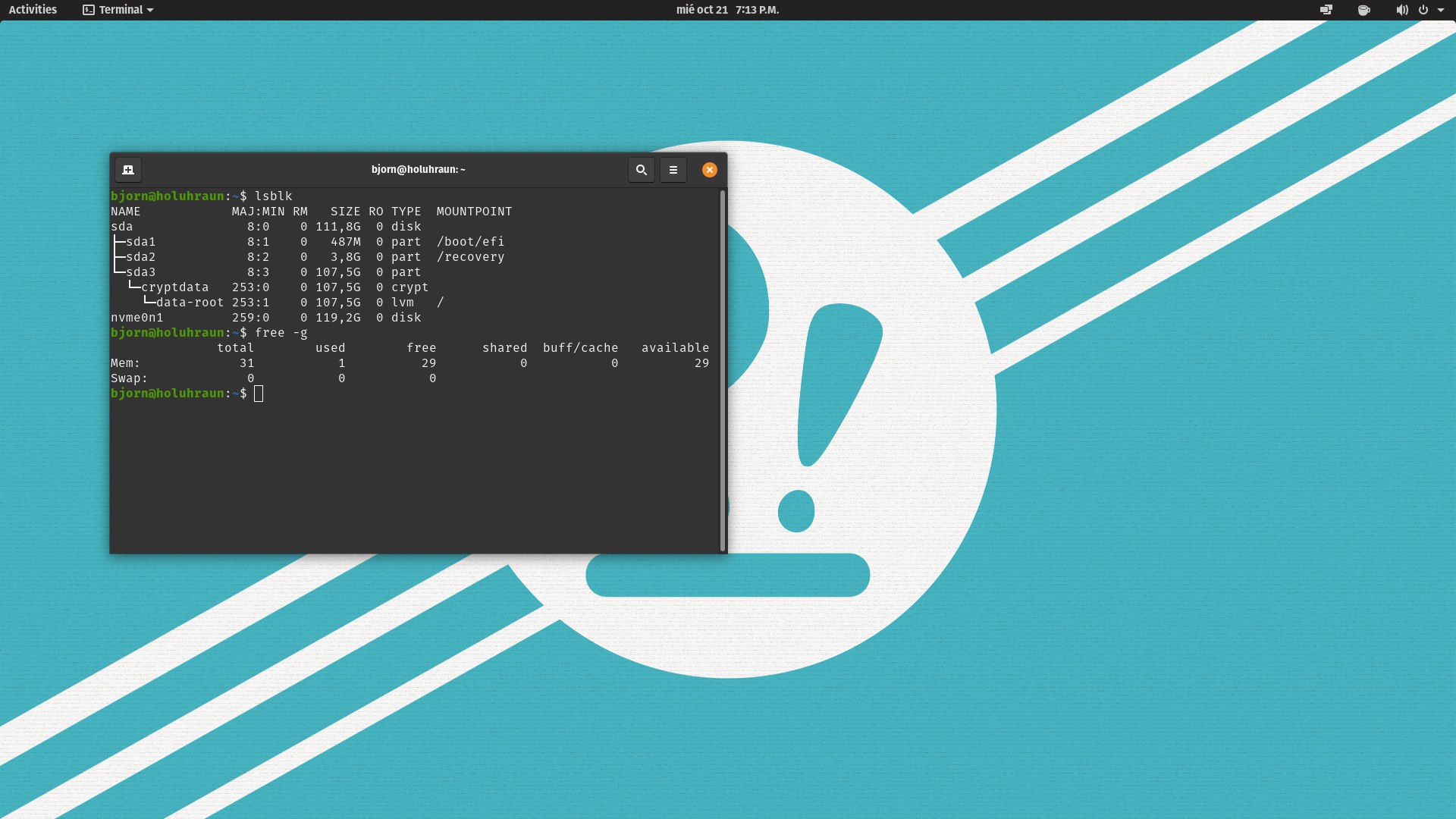

UPDATE (21-10-2020)

Yesterday I had the chance to open the Thelio and add the RAM and extra storage to it. To the "My complaints so far" section, I want to add the lack of documentation for the thelio-r2. I ended up unmounting the GPU just to find there was already an NVMe drive mounted there (not even mad, I thought it came with an SSD), but there was no second slot. By sheer luck, I found it on the right side (the one covered with the wood panel).

I struggled with inserting the RAM, but it was my fault, as I'm not a careful person. I needed to accommodate a cable in front of the RAM, but no issues so far. In the end, everything was recognized properly.

Something good: I reinstalled Pop!_OS and it seems the sound fix does not need to be applied again. I also installed Nix with home-manager and got the .desktop files working, although I need to log out and back in each time I install something new with Nix.

Tags: tech, unboxing, system76, review

Pod to Pod Communication with Podman

Podman is becoming a serious option for deploying containers at a small scale. In my case, as Fedora 32 isn't able to run Docker CE and the guide I was using to get that running didn't work, I had to learn how to stick with this alternative, and honestly, it is similar to Docker, so the learning process wasn't complicated. Also, you don't need root access to perform most of the simple tasks (contrary to Docker).

One cool thing about it is that you are able to set up pods and run containers inside them. Everything inside the pod will be isolated on its own network, and you are also able to open ports to the outside by mapping them to a host port. Generally, you will find lots of guides on how to install a stack on a single pod, but I wanted to go further and see how to set up communication between pods.

Let's say I want to create a pod for backend stuff (like a database) and another pod for frontend stuff (like an application). We can create a pod for backend containers and set up another pod for frontend containers, where we are going to map a port to the outside.

This example is based on this article. There is a lot of useful information here that you can check. Time to start!

Steps

Remember to run everything as superuser. To generate our network, we need to run the following command:

> podman network create foobar

We can see the generated network here:

> podman network ls
NAME     VERSION   PLUGINS
podman   0.4.0     bridge,portmap,firewall,tuning
foobar   0.4.0     bridge,portmap,firewall,dnsname

Now, let's generate the pods. As WordPress uses port 80 to show the application, we are going to map it to port 8080 for testing purposes. So, we do this:

> podman pod create --name pod_db --network foobar
> podman pod create --name pod_app --network foobar -p "8080:80"
> podman pod ls
POD ID         NAME      STATUS    CREATED          # OF CONTAINERS   INFRA ID
fe915374e2cd   pod_app   Created   9 seconds ago    1                 6bd416faab89
ef0b632e857d   pod_db    Created   14 seconds ago   1                 0b6fabe122b6

Finally, let's create the containers for each pod:

> podman run \
    -d --restart=always --pod=pod_db \
    -e MYSQL_ROOT_PASSWORD="myrootpass" \
    -e MYSQL_DATABASE="wp" \
    -e MYSQL_USER="wordpress" \
    -e MYSQL_PASSWORD="w0rdpr3ss" \
    --name=mariadb mariadb

> podman run \
    -d --restart=always --pod=pod_app \
    -e WORDPRESS_DB_NAME="wp" \
    -e WORDPRESS_DB_USER="wordpress" \
    -e WORDPRESS_DB_PASSWORD="w0rdpr3ss" \
    -e WORDPRESS_DB_HOST="pod_db" \
    --name=wordpress wordpress
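Before opening the browser, it can help to confirm that both pods actually came up. The snippet below is a minimal sketch and not part of the original steps: `check_pods` is a made-up helper name, it assumes the pod and container names used above, and it has to run as the same (super)user that created the pods.

```shell
# Hypothetical helper: summarize the state of the two pods created above.
check_pods() {
    podman pod ps                      # pod_db and pod_app should be listed here
    podman ps --pod                    # containers, with the pod each one belongs to
    podman logs --tail 20 wordpress    # last lines of the WordPress container log
}

# Only run the checks when podman is actually installed.
if command -v podman >/dev/null 2>&1; then
    check_pods || echo "one of the checks failed, see the output above"
else
    echo "podman not found, skipping checks"
fi
```

If one of the pods is not running, the last lines of the WordPress log are a reasonable first place to look.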

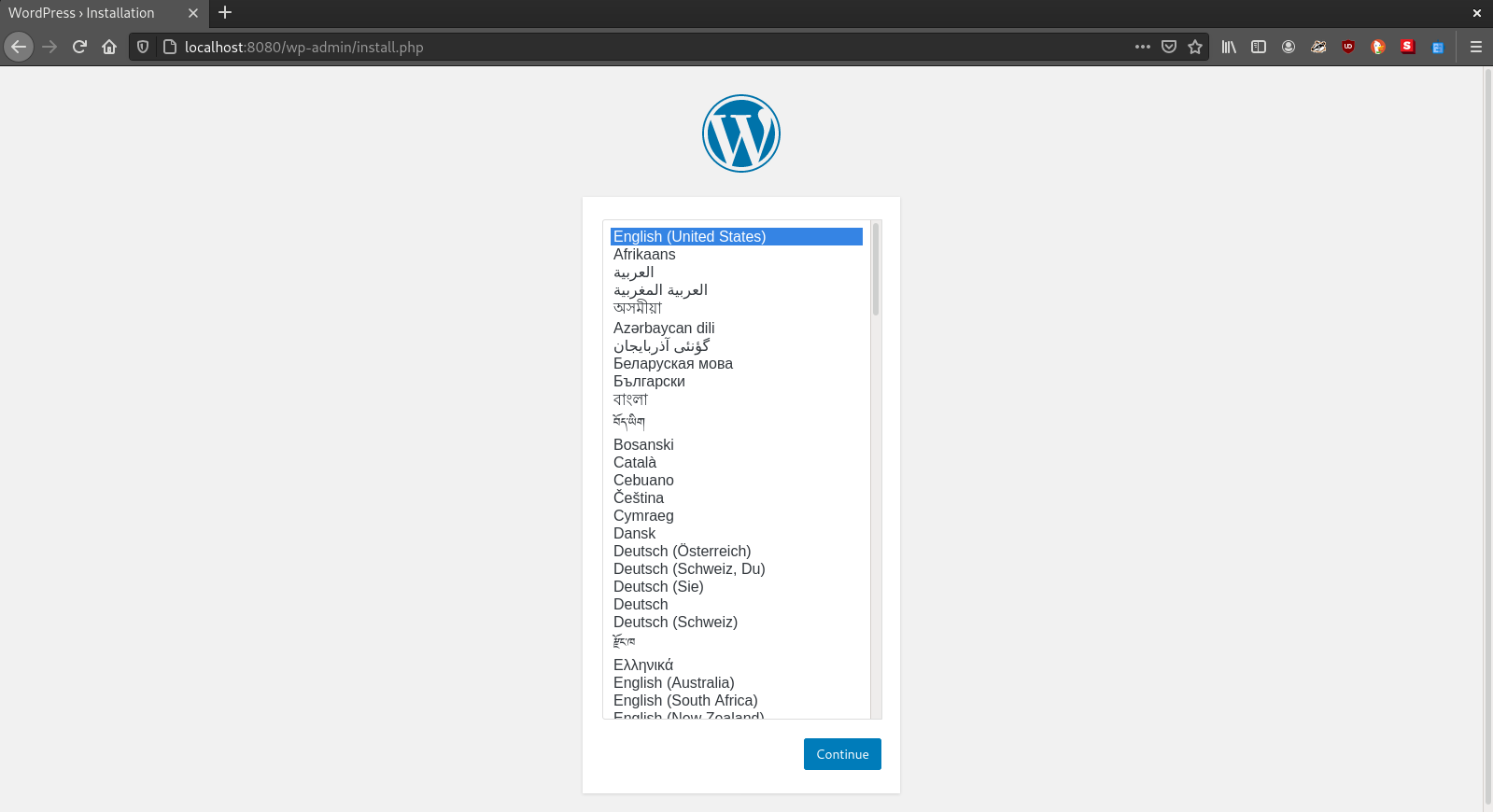

Now, check localhost:8080, and you might be able to see the application:

If you have any questions, feel free to send an email, and also check the Podman and dnsname projects.

Tags: 2020, tech, podman, containers, pod

It seems we came back to normal regarding this COVID thing

This is kind of a COVID-19 post written from my perspective, so it is mandatory to add the disclaimer that this is my opinion and you should take it with a grain of salt. We can have a discussion over email.

It seems everything came back to normal, as now I'm seeing too many people on the streets. I don't really care, as I got a (now completely) remote job and don't need to get out of the house unless I have to do something outside. And if I have to, I will wear a mask (because they look cool and people won't see my face).

Now, I have to say this: too many people are still romanticizing the situation, thinking that we will learn something new from all of this. We didn't and we won't.

There is/are still:

- People still without jobs and struggling to survive

- People thinking that they are the center of the world, so if they don't like something, they should not feel obligated (even if it's a national mandate) to do it because it is their "personal freedom"®

- Even more conspiracy theories flowing on the net

- COVID (that's expected) and a bunch of people dying from it

- And you pretty much know what I can add to this list, but it isn't necessary

All the people saying we should prioritize the economy over health, now that they won, seem to have forgotten the ones who were in poverty even before the pandemic. Well, in the end, as long as these people don't work for them, it doesn't really matter, they can die from COVID, poverty or whatever.

All the people who were ordering others to stay at home really ignored the fact that, again, there are people who can't do it. They need food to survive, and in our current times, you need money even for vital needs, and that can only be obtained by working (unless the govt. is providing help to them).

Everyone seems to be enclosed in a bubble, needing to dye it a popular color so they can be part of its hivemind. And although this sounds like one of those sentences used by people who consider themselves "different from the others", now it seems you have to be part of something to have a valid opinion. If not, you are just a phony, or even an enemy.

I am just going to say it: There is something wrong with our current way of living. People can't live normally, we are fighting over banal topics, there is no common sense about basic considerations in a society. Now everything seems motivated by political intentions.

All I can say is: Let's acknowledge this and see what we can improve personally (at least).

TL;DR

Whoever thought the world would change after COVID-19 needs some kind of reality check and needs to understand the current battleground (yes, a battleground). But y'know, we can do something on a personal level to solve it. But we must acknowledge it first.

Tags: 2020, rant, covid, personal

You don't really need certifications: A Short Story

Here I come with a rant about something that has become a somewhat unnecessary practice for getting jobs in IT. As a disclaimer, this is a personal opinion and I'm open to discussion, so take it with a grain of salt.

Before working

I was a student who always strove to get the highest score. If I analyze myself from elementary school until the last year of high school, I really liked to show off that I was the best by getting the highest grades. Where I live, the highest score was generally a 100, or a 10 in some cases. Seen from a certain perspective, it might feel so rewarding and beautiful to see that your score on something was composed of three digits instead of two, which would be something small and an indicator of failure at something (or everything).

But getting to the point, I wasn't really showing that I learned stuff, I was just competing against my classmates over who had the best score in the room. For my parents, it was an indicator of success. If you got less than a 100, one of the main complaints would be "where did you fail?" or "were you still the highest?". If it was lower than 90, then it would get harsher, like "you need to study more". The "threshold" for passing a course is 70, so I still had a pretty good grade, but for them, and even for other parents with their kids, less than 90 would be something bad. And it was part of my mindset. Less than 90 would earn me the biggest punishment of my life at the time: not being able to use the computer for a week, which would mean no World of Warcraft or whatever I was playing at the time.

For the two years before college, I went to a high-quality high school, considered one of the best in the country. I went there by personal decision, but with the obvious goal of showing that I was one of the best students in the country. I had my bad grades on the first exams (a 30 on a physics exam was the most painful), but I was able to recover my grades that semester (getting a 100 on the last exam, which was majestic). I graduated with what is called "honor bachelor", which means passing the national exams we take in the last year of high school with grades above 90 on all topics. I got a medal (I don't have it anymore) and a certificate (useful for nothing now), and it was beautiful. Then college came.

College was that punch that knocks you to the ground on the first blow, letting you know that you aren't anything special, just one of the many. Although the whole experience of that high school did help me (at least in the math and writing courses I had), my first semester consisted of two related programming courses with a professor adept at the "academic terrorism" ideology (torture your students psychologically and only keep the best), making me ditch the course in the middle. Ah, and that professor dictated his own rules: if you left the course and told him through email that you were not coming back, he would give you a 65. If not, even if you did homework and such, you would get a 0. Guess who didn't send an email?

Having two 0's on your grades was simply a bummer, but it was what taught me to be humble about grades, and to really try to learn, because that bad experience came from a mix of being too cocky and thinking that I was superior. Still, I wouldn't say I learned a lot in the rest of my college life, because there were topics that I really disliked or where I didn't have a proper teacher who really taught the topic instead of just collecting the paycheck (looking at you, Algorithms Design). In the end, I only had one course with less than 70, one with a 75 and the rest were between 80 and 100. That helped me get scholarships, hence getting my semesters paid, which helped my parents not spend much money besides what I needed monthly. I didn't work because my parents didn't want me to (bad decision) and wanted me to graduate quickly. So, I spent 4 and a half years, which is a "good indicator" for an Engineering student.

Working (and the good experience of certifications)

I was pretty worried about all the "threats" I received prior to my first work experience. "Working is way different than college", or "nothing you learn in college will be useful in real life" were the most menacing, and I really thought I was an academic guy (I wanted to be a teacher) and wouldn't have a good experience in my internship. In the end, it was completely different: I enjoyed the experience and loved the idea of going to work every day. I lived more than 50km away from my workplace at the time and I still enjoyed going there, because I felt useful and learned a lot of stuff (plus, I was getting paid). And well, what I learned in college did help me do a cleaner job, something easy to document for future reference (ex-coworkers still tell me this, which makes me slightly blush). So take that.

I didn't go for any certificates at the time because living far away consumed a lot of time. Besides, I had a small part-time job that was paying in dollars, which is a valuable currency and relatively expensive to get here. But seeing coworkers getting certified, plus my workplace motivating people to get certified in the cloud, was my motivation to go back to studying. After moving to a place closer to my workplace, I started preparing for my first certification, AWS Cloud Practitioner. I wanted to go with the Solutions Architect Associate first, but it was too expensive and I didn't want to lose that money. I was nervous, but passed the exam without problems.

A few months later, I decided to go for the Solutions Architect Associate. This time I felt confident: I had paid for a yearly platform subscription (Linux Academy) and the guy who did the course (Adrian Cantrill, who now has his own platform) is one of the best. I felt that I learned a lot after watching all of the videos, and felt that I really was able to handle almost any kind of situation related to designing on AWS. I did the exam and got a very nice score.

In the same month, I got an email from GIAC saying that I was chosen to do the beta exam for a certification they wanted to make available in a few months, and for free. I spent my time studying on the bus and also passed it. Finally, I started practicing for the Certified Kubernetes Administrator, even without any prior experience with Kubernetes. I sweated over that certification, and I was really worried about getting a good grade or at least passing it. But even if I didn't pass, I would feel proud of learning a lot about how to handle it. In the end, I just missed two 2-point questions, and got a nice grade.

The bad experience with a single certification

Here is where the purpose of this post comes in. So, this year, I was at another job completely unrelated to my previous work experience. At the beginning, I was enjoying what I was doing, but as time passed, it became boring, presented no challenge, and some of the stuff I was doing I wasn't even able to automate because the higher-ups didn't want to listen and wanted to keep their solutions. I was looking for jobs, and had the luck of having an ex-coworker who referred me for a Cloud Engineer position at a company where he works as a manager. The only thing that I was lacking, but seemed easy to get with my previous experience, was Azure knowledge. So I booked an Azure Fundamentals exam (for free) and put my effort into the exam. He even asked me to get an Azure Administrator certification, but honestly I was focused on the CKA at the time, so my condition was that he confirm this was necessary to get the position, so I could reschedule the CKA and focus on the Azure exam. I got no response, and I'm glad I didn't, so I kept working on the CKA.

Here come the problems: as we are in the COVID-19 situation, exam centers are closed, so you had to do this at home. It wouldn't be a problem, as I did the CKA at home without any issues; I only had to get a decent webcam and use Google Chrome (totally tolerable). For this one, you must have Windows or Mac to run their testing software (OnVUE). This is a huge red flag for me, as my personal computer has Fedora, and I have neither the money nor the interest to purchase a Mac device or a Windows ISO. At least I had my work computer lent by the company, which ran Windows, but it is completely locked down against any changes, so the firewall would probably give issues. When I ran the system check on that computer, the software indicated everything worked as expected. But on the day of the exam, guess what, I had issues (no connection). I got frustrated, so I opened a ticket with OnVUE support to see if they could reinstate the voucher used for the exam, which they did. I rescheduled the exam for two months later.

During these two months, I wasn't accepted for that position my ex-coworker referred me to, but I did find another job as a Cloud Engineer at a small company. The CEO was my ex-boss at the first gig, and he was looking for someone able to help him apply DevOps practices at his software development company. He trusted my capabilities and I had enjoyed working with him during that time, so it was an awesome proposal (although it has some drawbacks that I might mention in another post). The whole focus is on AWS, and as it is a small company, at least I don't see the necessity of going to Azure or GCP.

Getting back to the Azure Fundamentals exam, I didn't study at all this second time. I just skimmed the same exam from Linux Academy, but I had in mind that getting that certificate wouldn't help me with anything; it would just be something on the CV that wasn't even valuable. In the end, I did the exam and didn't pass. But I felt nothing. Like, even if I passed, I would only go into that typical LinkedIn brag about "You got a new badge" and such. But to what benefit? My current workplace wouldn't even care (we don't use Azure) and it wouldn't reflect any knowledge of the platform (and I might say I can still use Azure, because it shouldn't be that different from AWS). Also, I didn't pay for it, so it didn't hurt. Sure, it was free and a good opportunity, but honestly, it is like getting free apple juice when you don't really enjoy or like it.

Reflection (and the reality)

Being part of water-cooler conversation groups with professionals in the area, one idea they all agree on is: certifications are only for CVs, they might not show any real-life knowledge. And to be honest, I agree with them. I took my AWS certifications because my previous workplace was "rewarding" people for getting these certifications (they only paid back what I spent on the exam; the salary and the opportunities for better experiences never came), I took the GCSA because it is a very expensive certification and it would look cool (what makes the difference is the knowledge obtained from the SANS course I took, which let me get this certification), and the reason I took the CKA was that there was a course+certificate combo on Black Friday, although I have to admit I loved the hands-on certification approach compared to consuming a book or watching slides and vomiting it onto a single/multiple choice exam.

And I have to admit, part of my goal with certifying was simply trying to look like the best kid of the bunch. LinkedIn (or any other social media) helps you brag about that stuff and gives you that pleasant dose of dopamine, just to show that you are a "successful" person. Certifications, most of the time, are not indicators of success or knowledge, and neither are grades or scores. What really matters is: can you handle it in real life at any time? If you can't, don't worry, you can still learn it, it is a matter of practice.

Unfortunately, to land a new job these days, it seems that the HR filter consists of checking what certifications you currently have. I don't really know if they look at your experience at all. In the end, the job opportunities I was able to get (including the transition from the internship to a full-time job) came mostly because they knew I'm a competent person at what I do and I looked friendly, able to get along with the team I was going to interact with. They didn't care about my certifications. I can't do anything to change HR, but maybe what I can do, or even you as an interviewer, is really listen to the people that you are interviewing. You should really know the person you are going to work with, and two pages are not a good summary of that.

Finally, I really think that I'm not the only one who did certifications with the single purpose of passing. What matters is what you learn from that, but honestly, having a certification or not might not make a difference to your professional level. How many people without a certification can really do a better job than the certified ones? I don't have the numbers, but I know people who match this description. They are still great professionals, able to learn whatever they set out to learn, and do an awesome job, without spending hundreds or thousands on an exam that would state they really know how to do it. I can really see it; it is not difficult to imagine at all.

Conclusion

Don't spend money on certifications with the purpose of having something on your CV. Only do it because you really want to learn something. If you need to demonstrate to a place that you know a topic by getting a certification, you should see it as a red flag, because this is not a good way to find talent or someone knowledgeable about the topic. Here is where the interviewing process with the technical people should evaluate your capabilities, not three lines on your CV saying you passed an exam. There might be exceptions, obviously, but the only tip I can provide to you (and even to my future self) is: just learn and apply. That is what really matters.

What I would say, if you are in college or just finishing high school and want to get into IT, is to get professional experience. Like I said, I didn't have any previous work experience before my professional internship, but I know people who are not even in college and are doing internships to learn. The Internet is the best source to learn stuff, but a job is where what you learned will be applied, which means more learning and acquiring experience.

Tags: 2020, tech, certifications, personal, rant

Using the NGINX container as a reverse proxy

In this post, we will do the following:

- Generate a container stack (a set of containers that interact with each other on their own network) where a reverse proxy (in this case NGINX) will handle the traffic.

- Create self-signed certificates (This is an example. Please try to generate certificates signed by trusted certificate authorities like Let's Encrypt)

- Configure NGINX to:

  - Listen on ports 80 and 443

  - Redirect traffic from port 80 to port 443

  - Configure the listener on port 443 to use the generated certificates and handle traffic from outside to the JIRA container on port 8080

Introduction

Recently I have been dealing a lot with containers to avoid writing huge scripts to deploy stuff that is not intended to run for a long time. For example, I was asked to deploy a test JIRA Core (specifying the Core part as JIRA now has three new modalities) because there were some changes in the API. I followed the guide from Atlassian, but honestly there were too many instructions to set up and the requester needed that server as soon as possible.

With containers, it is simply a matter of installing your preferred container engine (see the famous Docker, RKT, cri-o, Podman and so on), grabbing an image from an online registry (famous cases are Docker Hub or Quay.io) or developing one yourself if you are up to the task, and running an instance of that image with the necessary settings. Also, there are orchestrators (Kubernetes, Docker Swarm, Apache Mesos, and so on) where you indicate a stack of containers you wish to deploy and they will handle the whole lifecycle and management of the stack. In my case, I go down that path if the application is intended to stay longer than usual (or permanently). If it is something quick, then I use Docker Compose as a stack testing tool or for a stack intended to stay for a shorter period on a testing server.

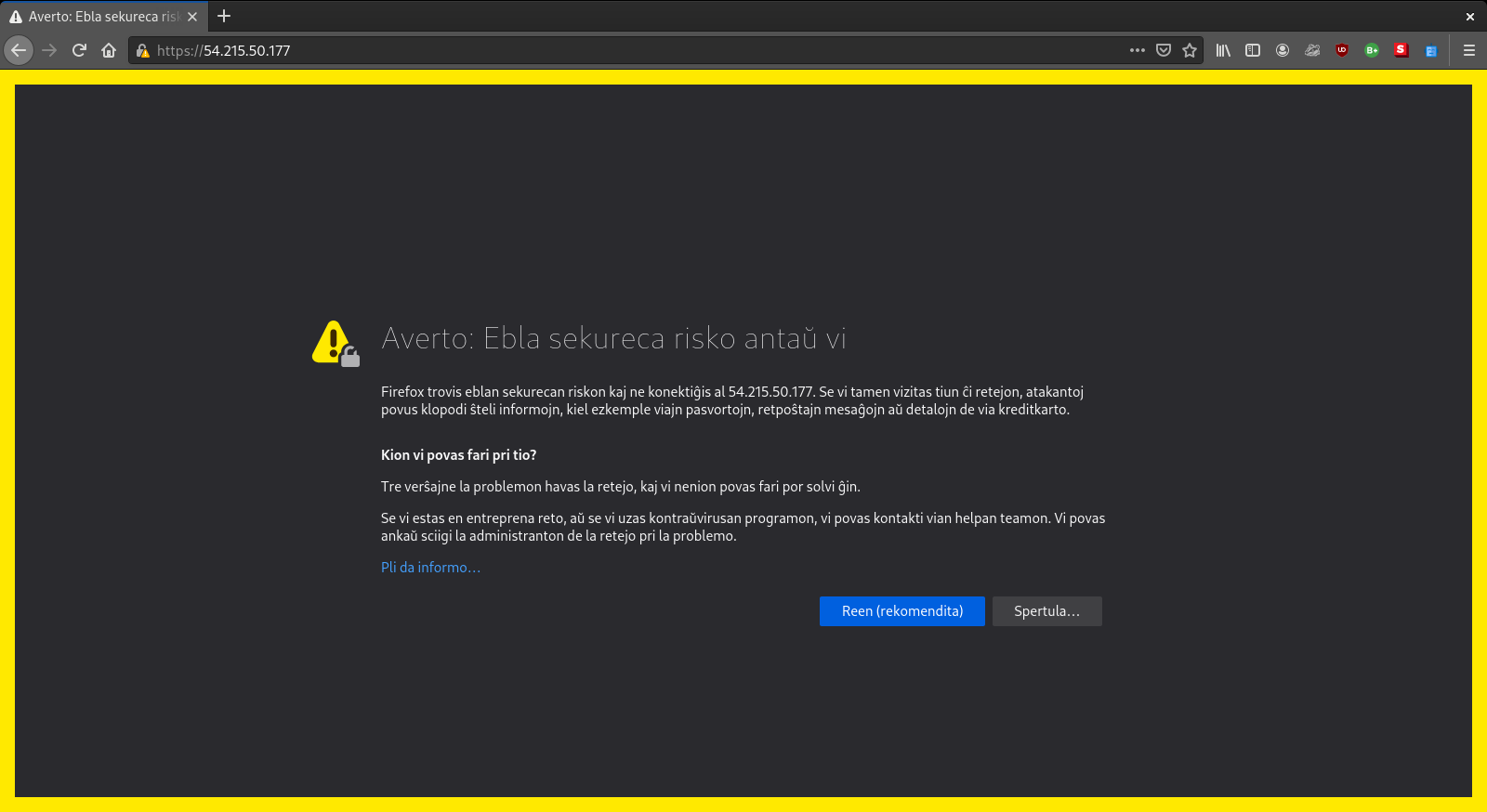

In this specific case with the JIRA server, I got the compose specs from this repo, ran them with Docker Compose, checked the logs and then it was a matter of tuning the server (in the case of the cloud, finding the instance type that supported running this application without crashing, which should be one with more than 2GB). But coming from a security team, well, the original stack was opening port 80, which, although it works, is not secure. Securing your application for public access must always be done. Here is where NGINX comes in.

I won't go into details about NGINX because I'm no specialist at it, but in summary, it can be used as a reverse proxy: it takes requests and forwards them to servers that can be in internal networks. This is all we need here: on our stack network, we open ports 80/443 for NGINX instead of JIRA, and we configure NGINX to forward all requests to the JIRA container, which will listen on port 8080 by default (see the docker-compose file from the previous link).

As this guide won't go into too many details (again, I'm not a specialist at NGINX), we will generate the following folder structure:

jira
|_docker-compose.yml   # Using the one from github.com/dockeratlteam/jira
|_nginx
  |_certs
    |_ca               # Certificate Authority
    |_jira             # Certificates for server
  |_config             # NGINX conf.d files

Generating self-signed SSL certificates

Again, this is an example. Please use trusted certificate authorities to sign your certificates. I decided to go down this path so you can get an understanding of how to create them.

First, let's go to the nginx/certs/ca folder. We will start with the Certificate Authority: first generate a key, then a certificate signing request (a request to the CA to obtain certificates), and finally the self-signed certificate:

openssl genrsa -out ca.key 2048 # Generating a 2048-bit key

openssl req -new -key ca.key -subj "/CN=own-ca" -out ca.csr # Generating Certificate Signing Request with key generated previously

openssl x509 -req -in ca.csr -signkey ca.key -out ca.crt # Generating the certificate

Now, we are going to generate the certificates we want on our server, signed by the previously created CA. The commands are pretty much similar. Move to nginx/certs/jira (or ../jira):

openssl genrsa -out jira.key 2048

openssl req -new -key jira.key -subj "/CN=jira" -out jira.csr

openssl x509 -req -in jira.csr -CA ../ca/ca.crt -CAkey ../ca/ca.key -out jira.crt -CAcreateserial # Creating the certificate by specifying the CA keys
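As a sanity check (not part of the original steps), you can ask openssl who a certificate belongs to and who signed it. The sketch below repeats the commands from this section inside a throwaway directory, so everything sits in one folder instead of ca/ and jira/. `workdir` and `cert_info` are names made up for the example, and it assumes openssl is on your PATH.

```shell
# Work in a throwaway directory so nothing here touches real certificates.
workdir=$(mktemp -d)
cd "$workdir" || exit 1

# Same steps as above: a self-signed CA first...
openssl genrsa -out ca.key 2048 2>/dev/null
openssl req -new -key ca.key -subj "/CN=own-ca" -out ca.csr
openssl x509 -req -in ca.csr -signkey ca.key -out ca.crt 2>/dev/null

# ...then a server certificate signed by that CA.
openssl genrsa -out jira.key 2048 2>/dev/null
openssl req -new -key jira.key -subj "/CN=jira" -out jira.csr
openssl x509 -req -in jira.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out jira.crt 2>/dev/null

# Print who the certificate is for (subject) and who signed it (issuer).
cert_info=$(openssl x509 -in jira.crt -noout -subject -issuer)
echo "$cert_info"
```

The subject line should mention jira and the issuer line should mention own-ca; the exact formatting depends on the OpenSSL version.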

Setting up the NGINX listeners

Generally, the default configuration file for NGINX, located at /etc/nginx/conf.d/default.conf inside the container, has the following settings (I removed the comments from it as we don't need them):

server {

# Listens on port 80 for server localhost

listen 80;

server_name localhost;

# Where the root path is located

location / {

root /usr/share/nginx/html;

index index.html index.htm;

}

# How to deal with server errors

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root /usr/share/nginx/html;

}

}

Here, what we want to set up is the following:

- A listener on port 80 that redirects all traffic to port 443, so that nobody reaches the application over plain HTTP.

- A listener on port 443 that indicates the certificates to use and where to forward our traffic.

Go to nginx/config, create a file with the .conf suffix in its name (NGINX won't care about the name as long as it has that suffix) and write the following:

# Proxying traffic from a certain server, in this case, the JIRA container

upstream jira {

server jira:8080; # On the docker-compose.yml, the JIRA container is named jira.

}

server {

listen 80;

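# For any request, redirect to the same host and path over HTTPS.

# $host is used because no server_name is set in this block, so $server_name would be empty.

return 301 https://$host$request_uri;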

}

server {

listen 443 ssl;

ssl_certificate /etc/ssl/jira.crt;

ssl_certificate_key /etc/ssl/jira.key;

location / {

# NGINX will do the reverse proxy to the upstream jira settings specified above.

proxy_pass http://jira;

}

}

Setting up the docker-compose file

Alright, now we are going to modify the JIRA container settings in that docker-compose file and add our NGINX container specs.

For the JIRA settings, remove (or comment) the port settings. They look like this:

services:

jira:

...

ports:

- '80:8080'

...

Note that in this specific case you might need to add some settings to the JIRA container so it works correctly behind the reverse proxy. I won't cover them here, as that is not the goal of this post.

Now, add the nginx specs on the services list:

services:

...

nginx:

image: nginx:latest # Use another tag if stack is going to production

restart: always # JIRA will take a while to load. This will help

networks:

- jiranet # Same network being used on jira and postgresql containers

ports:

- '80:80'

- '443:443'

volumes:

- "./nginx/config/:/etc/nginx/conf.d/" # Mapping our conf file

- "./nginx/certs/jira/:/etc/ssl/" # Mapping our ssl certs

...

And now, we should be ready to run the docker-compose up command (or, if you are using an orchestrator, its equivalent commands).
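Once the stack is up, one way to check both listeners is to look at the response headers with curl. This is a small sketch, not part of the original setup: summarize_headers is a hypothetical helper of mine that prints the status code and the Location header, and localhost is an assumption about where you published the ports.

```shell
#!/bin/sh
# Sketch: reduce HTTP response headers (read from stdin) to
# "<status code> <Location header, if any>".
summarize_headers() {
    awk '
        NR == 1                     { status = $2 }
        tolower($1) == "location:"  { loc = $2 }
        END {
            gsub(/\r/, "", loc)     # headers end in CRLF
            print status, loc
        }
    '
}

# Usage against the running stack (assumes the ports are published on localhost):
#   curl -sI  http://localhost/  | summarize_headers   # port 80 should answer with a redirect
#   curl -skI https://localhost/ | summarize_headers   # -k because the CA is our own
```

The -k flag is only there because the certificate is signed by our example CA, which curl does not trust. With a certificate from a trusted authority it should not be needed.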

This is what we might see after a while when JIRA has set up the database:

Observations

- If you want to go deeper into customizing your NGINX container, one good place to look at NGINX settings is /etc/nginx/nginx.conf inside the container. Its include directive indicates that it will read files from /etc/nginx/conf.d/*.conf. You can create a separate file for each listener (like 80.conf and 443.conf) and you should get the same result, but in a more organized manner.

- Although we used Docker Compose for this, the container settings can pretty much be used elsewhere, as long as the NGINX container is able to reach the JIRA container (or any other container) through the DNS name specified (in this case, jira) or its IP (not recommended though, as it might change when a container is destroyed). This means they should be on the same network.

Tags: 2020, tech, containers, nginx

NAT Instances: Why use the first option on the Marketplace?

So, today I was trying to build an architecture on AWS through Terraform where a NAT instance was needed for a private subnet that required an internet connection for updating packages. Honestly, I could have used a NAT gateway for this, but I wanted to go the extra mile and brush up on my knowledge of designing VPC architectures on AWS. I remember doing that when practicing for the AWS Solutions Architect Associate exam, and I had gone a long time without applying any cloud knowledge until now that I have a cloud engineering job. Plus, Terraform is a very cool tool, so I wanted to get a grip on best practices and such.

When I followed some of the guides on how to do that (see the official guide from Amazon or an A Cloud Guru video on creating VPCs), I felt confident it was going to work in the end. Unfortunately, my instances in the private subnet weren't able to ping anything (not even the instances in the public subnet, even with an explicit security group allowing ICMP traffic to them), and I had a good time doubting my ability to read and watch tutorials (which is a good trigger for your daily dose of imposter syndrome).

Luckily, and I really thank the author of the guide, I was able to find this. After applying the indicated commands (in summary, enabling IP forwarding through sysctl and creating a rule in iptables), I was finally able to get my instances in the private subnet to reach the internet. I knew my settings had to be right, because otherwise not even AWS would be providing a working guide, which would be horrible. But then the question came: what was I using that was outside of the formula?
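For reference, those two steps usually look something like the sketch below. It is not a copy of the commands from the linked guide: the interface name (eth0) and the private subnet CIDR (10.0.1.0/24) are assumptions you would need to adapt, and run and nat_setup are helper names of mine. By default the script only prints what it would do; set APPLY=1 and run it as root on the NAT instance to actually apply it.

```shell
#!/bin/sh
# Sketch of a NAT instance setup. Prints the commands by default;
# set APPLY=1 (as root) to execute them.
set -eu

OUT_IFACE="${OUT_IFACE:-eth0}"                # assumption: public-facing interface
PRIVATE_CIDR="${PRIVATE_CIDR:-10.0.1.0/24}"   # assumption: private subnet range

# Execute the command when APPLY=1, otherwise just print it
run() {
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    else
        echo "$*"
    fi
}

nat_setup() {
    # Let the kernel forward packets between interfaces
    run sysctl -w net.ipv4.ip_forward=1
    # Rewrite the source address of traffic coming from the private subnet
    run iptables -t nat -A POSTROUTING -o "$OUT_IFACE" -s "$PRIVATE_CIDR" -j MASQUERADE
}

plan=$(nat_setup)
echo "$plan"
```

Neither setting survives a reboot when applied this way, so on a long-lived instance you would also want to persist them.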

And I have the answer. When I was choosing the AMI to be used as a NAT instance, I decided to go with the most recent version. It wasn't necessarily the first option on the list of Community AMIs, but you know, the name had 2020 in it. So, this time, I recreated it with the first option on the list, which seems to be an image from 2018. Well, after deploying it and doing an SSH jump to the instance in the private subnet, that instance was able to ping and update without any issues. Also, if I remember the A Cloud Guru video correctly, Ryan used the first option on the list, which was an image from 2018 (maybe the same one, not sure though). He didn't choose it specifically; it was just the first on the list.

So here it goes, my friends: maybe this post doesn't give a direct answer to the question, but this is where I would say that it is best to start with something that works, so you have a checkpoint and can tweak your settings later. And in case something doesn't work, well, you have a usable checkpoint, so just roll back to it.

I plan to upload a simple guide to Terraform soon, at least covering the basics of how to create an instance, set up your user and generate a random password dynamically.

Tags: 2020, tech, aws, nat-instances, vpc, architecture

Introduction to this blog

This blog only came into existence because I was testing this project.

So, right now I'm only testing a container with this program and seeing if it works as expected :). But I plan to post stuff here (or I hope so).

If you want to see the Dockerfile for this program, please check here.

Tags: 2020, hello-world, introduction, first-post